AI-Driven Autonomous Rule Tuning with Synthetic Test Data

What if you could generate realistic test values on demand, without using real data, that automatically fine-tuned your detection rules?

Traditional rule optimization is a manual nightmare.

You start by finding an appropriate dataset. Then you create a regex or another type of hardcoded pattern recognition rule. Next, you handcraft a handful of test cases, tweak keywords based on intuition, check your results, tweak again, and finally, deploy, only to discover that a few new formats have slipped through the cracks.

But imagine being able to generate fully tagged, diverse datasets used to learn recognition patterns, create detection rules and refine them, with continuous deployment and optimization.

All of this is possible, and with minimal to no human intervention, using widely available and well-known LLM tools and APIs.

In this blog, we’ll explain how we solved these challenges using LLMs and explore the use cases enabled by this approach.

The Problem: Manual Optimization is an Endless Trap

Picture this: you’re tasked to optimize a firewall rule to catch suspicious domains.

You start with the simple pattern:

\b(no-ip\.com|duckdns\.org|\.zip)\b

And it correctly flags any query containing those exact strings.

Then you discover adversaries slipping through by changing a single character or using punycode, for example:

n0-ip.com(zero instead of “o”)duckdnsr.org(extra “r”)

So you loosen your pattern to allow for one-character typos and punycode prefixes. But now, not only are you getting false alarms on legitimate domains that happen to look similar - your production environment is also suffering from inefficient blocking and filtering - and your regex keeps growing more complex:

\b(?:[nN0]-?ip\.com|duckdnsr\.org|(?:xn--)?[a-z0-9\-]+\.com|...[zip])\b

Every tweak feels like guess-and-pray, because you have no large, representative dataset to validate against and no visibility into production traffic - only a handful of handcrafted examples. After days of iterating you end up with something that “works well enough” on your tiny test set, but little to no confidence in how it will behave in the real world.

Now let’s zoom out to the core issue: the lack of diverse, tagged datasets to train and validate our detection rules. Gathering reliable, pre-labeled data is widely recognized in the data community as one of the biggest obstacles - not just for scenarios like this, but across countless detection challenges.

We were tired of this guessing game.

The Solution: AI-Driven Autonomous Rule Tuning with Synthetic Test Data

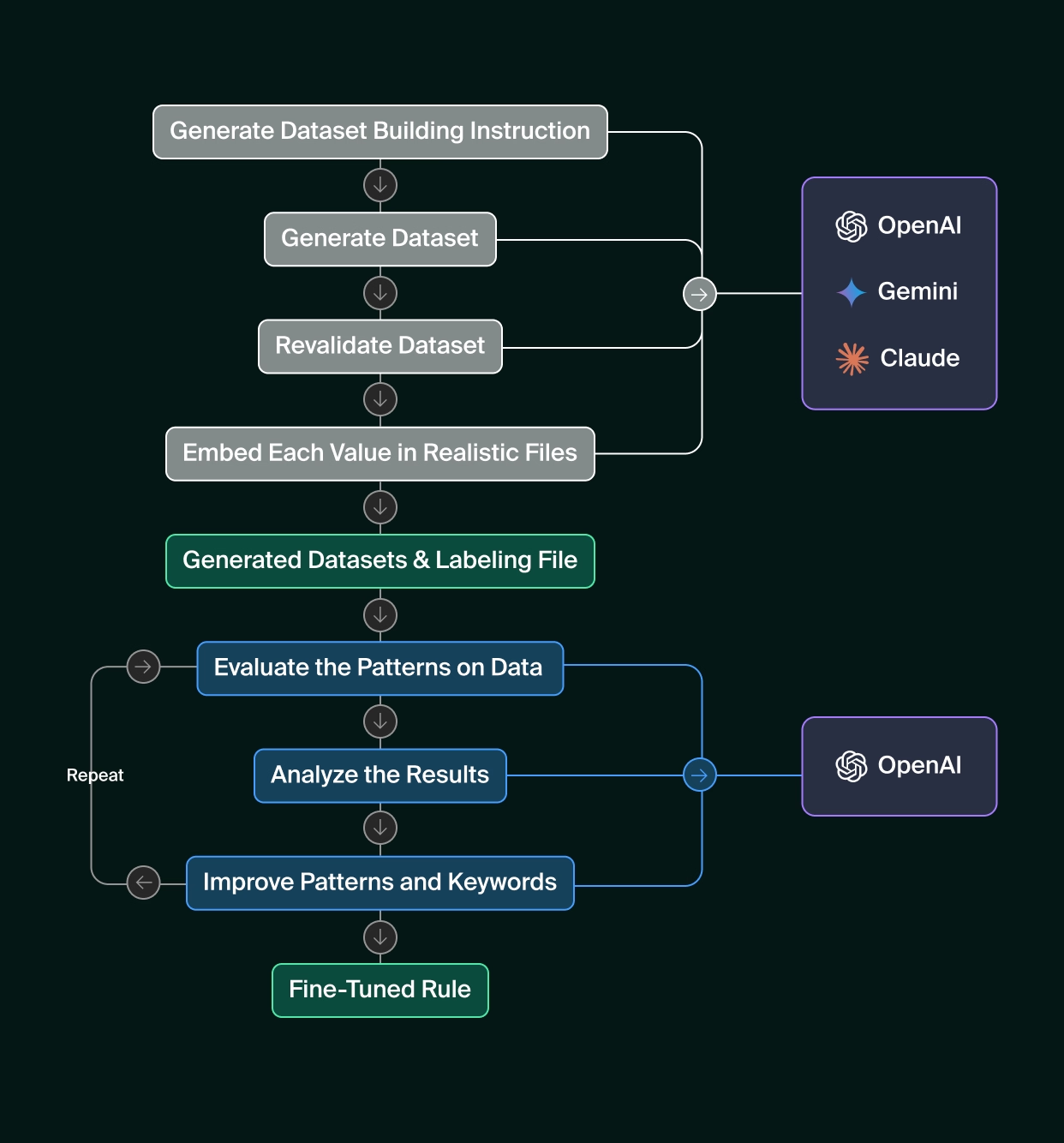

We replaced that endless tweak-test-deploy loop with an autonomous AI engine that works in three steps.

First, a dedicated model (Model A) generates huge, fully tagged synthetic datasets on demand - with the goal of covering every edge case, variation, and encoding trick you can imagine - and embeds them into realistic, file-like artifacts (Packets, logs, JSON, document snippets, etc.), while simultaneously producing a corresponding ground-truth label file that maps each value to its correct tag.

Second, a separate model (Model B) uses off-the-shelf LLM APIs to propose, test, and score each candidate rule or detector quantitatively (precision, recall, false-positive rate) against those files - and even revalidates the leading patterns against other AI tools to catch blind spots while minimizing overfitting.

Finally, it iterates automatically - refining your patterns or configurations until your performance metrics hit predefined targets - and then ships only the battle-tested static rules or configurations. No more hand-crafting test cases, no private data, no bespoke model training or hosting. What was once an art of guess-and-pray becomes a reproducible, data-driven science (and art).

Our Strategy: Static Rules, Dynamic Optimization

In the planning phase of the project, building a custom model that can run on production data sounded tempting, but a hard look at engineering trade-offs made the decision easy.

Our choice: off‑the‑shelf LLMs for offline tuning and static patterns for production

*While we’re aware of many emerging approaches in the world of LLM-based detection, we chose to focus this comparison on just two: off-the-shelf LLMs (used offline for tuning) and custom-trained LLMs served live in production. After evaluating our system’s architecture, capabilities, timeline, and specific needs, these two options emerged as the most relevant and viable for our case. Other approaches, though promising in different contexts, didn’t align as closely with our constraints or objectives.

In addition to the points above, for our specific case, we also considered additional cost topics (ongoing GPU-hosting and retraining) as well as operational safety (drift, warm-up failures, or unexpected version changes, etc.), after taking into account the full range of our needs and the available options, we decided to go with offline rule tuning using of the shelf LLM solutions.

In short, off-the-shelf LLMs give us rapid iteration and deep language coverage during optimization, while static patterns preserve the speed, transparency, and incident-level traceability we need once the rules are live.

The Turning Point: Let AI Handle Both Data and Tuning

Synthetic Data: Diverse, Relevant, and Comprehensive

Since no public datasets existed and policy barred the use of production data, we turned to LLMs as the most cost-effective, practical solution. Our system now produces fully tagged test sets that include:

- Valid examples: Legitimate values for each use case

- Invalid examples: Benign inputs that might trigger false positives

- Edge cases: Unusual variants (e.g., special encodings, subdomain shifts, irregular IDs, atypical payment patterns)

- Adversarial cases: Obfuscated inputs crafted to evade detection

- Contextual variations: Values embedded within realistic file artifacts

This yields rich, comprehensive coverage at a fraction of the cost.

The core effort lies in LLM-driven generation and in validating the integrity and reliability of the synthetic data - a topic we’ll explore in depth later.

Because LLMs can systematically explore the full space of variations, our AI-generated datasets often exceed what human testers devise or what public data sets can offer.

Once we saw how effectively the model created challenging test cases, the next step became obvious: if it can generate the problems, why not let it suggest the fixes? We therefore wrapped a second AI-driven loop around the corpus - an autonomous agent that handles both test-data generation and rule refinement end-to-end.

The Optimization Agent: Tireless and Objective

We introduced a dedicated module in our architecture to create, analyze, and fine-tune rules automatically against predefined quality thresholds - no human intervention required. Its primary responsibilities are:

- Analyze failures: Identify which synthetic test cases the current rule misses or misclassifies

- Choose strategy: Decide whether to tweak the regex pattern, adjust keywords, or both

- Generate improvements: Leverage LLMs to propose concrete pattern and keyword modifications based on quantitative feedback

- Test changes: Validate each proposal against the full synthetic dataset - and even across different AI providers - to guarantee robustness and catch provider-specific quirks.

- Accept or reject: Make objective, data-driven decisions by measuring score improvements

No more hand-crafting edge cases, no more guesswork with regex. Just continuous, AI-driven refinement powered by metrics, not intuition.

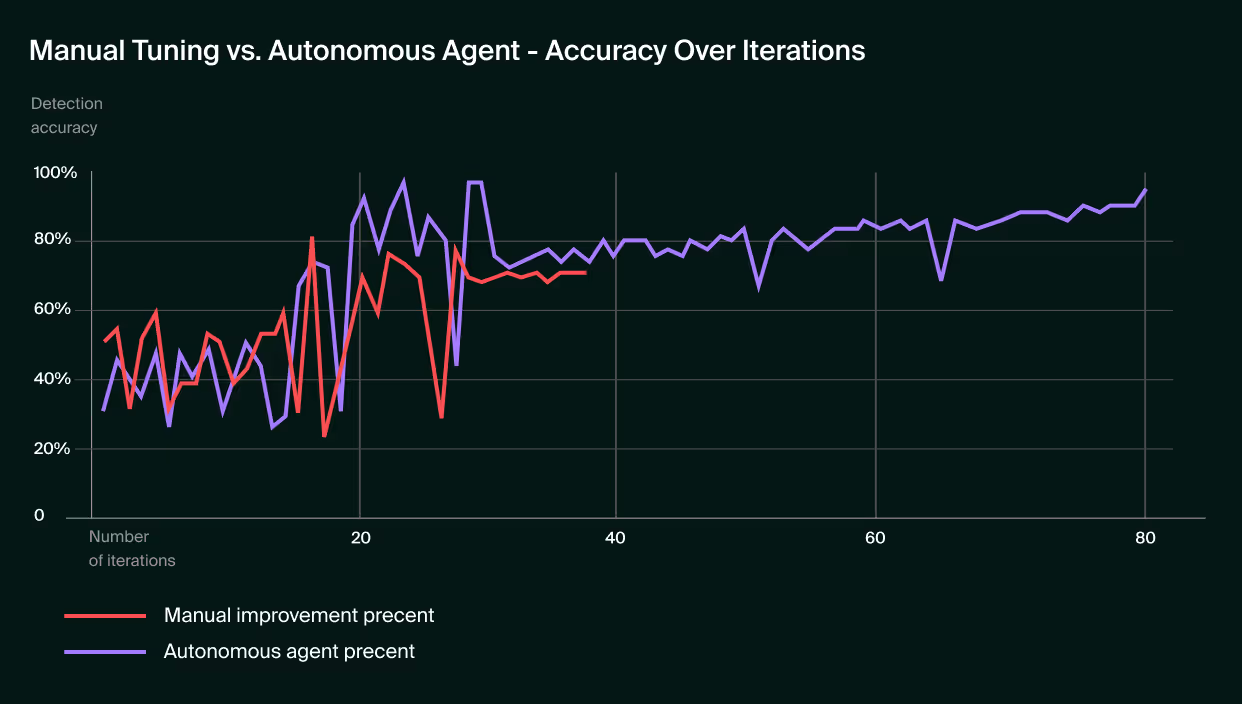

The difference between manual tuning and autonomous improvement becomes especially clear when looking at their performance over time:

The Scoring System: Turning Gut Feelings into Math

We mentioned before that the rules are tested and scored until a predefined threshold is met. Practically, We replaced guesswork with a numeric score: every true-positive, false-positive, false-negative, and true-negative contributes a weighted value to a single metric.

Because the weights live in code - not in somebody’s head - the optimization agent can:

- Compute the exact impact of any rule change.

- Compare alternatives automatically.

- Promote only the variant that improves the score.

In other words, the AI never “hopes” a tweak is better - it proves it before anything ships.

The elephant in the room: LLM reliability

While LLMs unlock powerful pattern recognition, they are far from infallible. To build confidence in an AI-driven tuning pipeline, we layered multiple safeguards:

- Model Comparison & Iterative Runs

One of our key insights was that rules optimized against a single LLM often perform poorly when evaluated by different AI systems. A pattern that works great with OpenAI might fail miserably with Claude or Gemini. So we built cross-validation directly into the optimization loop. Every rule candidate is tested against OpenAI, AWS Bedrock, and Google Gemini (or any other platform we choose). Only patterns that perform consistently across all providers and that withstood the other tests make it to production. This catches subtle edge cases that single-provider optimization misses and ensures our rules are robust across different AI evaluation engines. - Human-in-the-Loop Validation

At each development milestone, engineers manually inspect a representative sample of AI suggestions - spotting hallucinations, correcting misclassifications, and refining prompts - to ensure every proposal meets our quality expectations. By applying manual validation early in the development process, we guarantee that each module’s prompts and validation workflows remain reliable and trustworthy. Once this validation is complete, the agent takes over entirely, running fully autonomously and iterating without any further human intervention. - Ground-Truth Benchmarking

Whenever labeled examples exist - whether from public data or a small hand-curated set - we measure each model’s outputs against those ground-truth tags. This quantitative feedback drives our selection of both models and prompts. - Rigorous Prompt Engineering

Crafting prompts is a first-class task. We always aim to write crystal-clear instructions that account for each model’s quirks - its blind spots, token limits, and domain strengths - so the AI plays to its advantages and avoids common failure modes. - Comprehensive Logging & Audit Trails

Record every input, model prompt, output, and chosen action so you can forensically reconstruct exactly how a decision was made, analyze the data externally, and identify the most efficient, targeted ways to address any issue. - Detector Analysis

As an additional validation step, we compare different types of detection mechanisms (e.g., regex patterns, keyword matching, and specialized logic) against one another. This allows us to identify outliers-which, based on our observations, should be minimal to nonexistent in most cases, and flag them for further in-depth analysis by our team to fix or rule out any possible reliability issues.

We recognize that these steps demand significant effort - multiple LLM evaluations, human reviews, baseline tests, and prompt refinements. Yet, by investing here, we gain much more confidence with every rule the system ships - and that reliability payoff makes the extra work more than worth it.

Conclusions

At Island, we faced the familiar challenge of end-to-end data gathering, rule tuning, and detection. By leveraging well-known, publicly available LLM APIs, we built both a data-generation engine and a rule-creation/evolution pipeline that deliver:

- Rapid rule-tuning improvements in production

- High precision and availability

- Minimal effort and cost

Combined with automated deployment, this approach can power continuously optimized detection systems across the data industry.

Of course, using LLMs demands rigorous validation and reliability safeguards to ensure trust and reliability. But when done right, it unlocks a host of benefits:

- No human bottlenecks after the initial confidence checks the agent iterates on its own, faster than manual tweak-and-test cycles.

- Objective decisions Changes ship only when metrics improve - no gut feelings.

- Extensive edge-case coverage Synthetic datasets surface variants that human testers would rarely consider.

- Production safety No production data is ever shared with AI tools, online services, or human reviewers, guaranteeing full data privacy.

- Easy debugging Final output is a deterministic rule, with minimal to no black boxes as in other available options.

Accessible to any engineer No ML expertise required; the complexity stays behind API calls

Instant Rule Optimization - Today’s Reality

Let's go back to the beginning of this blog: you’re tasked with tuning a firewall rule to catch suspicious domains. You feed a rough regex into our system - and five minutes later, it returns a battle-tested pattern, complete with optimized keywords and validation settings you’d never have crafted alone. That rule has already been hammered against thousands of edge cases and vetted across multiple AI engines. What once felt like science fiction is now our day-to-day.

.svg)

.svg)

.svg)